JIT in Prolog

Hi all,

Some news from the JIT front. Progress on the JIT has been low-profile in the past few months. No big results to announce yet, but we have played with some new ideas, and they are now documented as a draft research paper: Towards Just-In-Time Compilation and Specialisation of Prolog.

Prolog? Yes. To understand this slightly unusual choice of programming language, here is first some background about our JIT.

PyPy contains not a JIT but a JIT generator, which means that we only write an interpreter for a language (say, the complete Python language), and we get a JIT "for free". More precisely, it's not for free: we had to write the JIT generator, of course, as well as some amount of subtle generic support code. The JIT generator preprocesses the (complete Python) interpreter that we wrote and links the result with the generic support code; the result is a (complete Python) JIT.

So far, the generated JIT works in much the same way as Psyco. But Psyco has issues (and so the current PyPy JITs have the same issues): it can sometimes produce too much machine code, e.g. by failing to notice that two versions of the machine code are close enough that they should really be one; and it can also sometimes fail in the opposite way, by making a single inefficient version of the machine code instead of several efficient specialized versions.

A few months ago we chose to experiment with improving this instead of finishing and polishing what we had so far. The choice was mostly because we were (and still are) busy finishing and polishing everything else in PyPy, so it was more fun to keep at least the JIT on the experimental side. Besides, PyPy is now getting to a rather good and complete state, and it is quite usable without the JIT already.

Anyway, enough excuses. Why is this about Prolog?

In PyPy, both the (complete Python) interpreter and the JIT support code are in RPython. Now RPython is not an extremely complicated language, but still, it is far from the top on a minimalism scale. In general, this is good in practice (or at least I think so): it gives a reasonable balance because it is convenient to write interpreters in RPython, while not being so bloated that it makes our translation toolchain horribly complicated (e.g. writing garbage collectors for RPython - or even JIT generators - is reasonable). Still, it is not the best choice for early research-level experimentation.

So what we did instead recently is hand-write, in Prolog, a JIT that looks similar to what we would like to achieve for RPython with our JIT generator. This gave much quicker turnaround times than we were used to when we played around directly with RPython. We wrote tiny example interpreters in Prolog (of course not a complete Python interpreter). Self-inspection is trivial in Prolog, and generating Prolog code at runtime is very easy too. Moreover, many other issues are also easier in Prolog: for example, all data structures are immutable "terms". Languages other than Prolog would have worked, too, but it happens to be one that we (Carl Friedrich, Michael Leuschel and myself) are familiar with -- not to mention that it's basically a nice small dynamic language.

Of course, all this is closely related to what we want to do in PyPy. The fundamental issues are the same. Indeed, in PyPy, the major goals of the JIT are to remove, first, the overhead of allocating objects all the time (e.g. integers), and second, the overhead of dynamic dispatch (e.g. finding out that it's integers we are adding). The equivalent goals in Prolog are, first, to avoid creating short-lived terms, and second, to remove the overhead of dispatch (typically, the dispatching to multiple clauses). If you are familiar with Prolog you can find more details about this in the paper. So far we already played with many possible solutions in the Prolog JIT, and the paper describes the most mature one; we have more experimentation in mind. The main point here is that these are mostly language-independent techniques (anything that works both in Prolog and in RPython has to be language-independent, right? :-)
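To make the two overheads concrete, here is a minimal Python sketch. It is not code from PyPy or from the paper: the box classes `W_Int` and `W_Float` and the functions `generic_add`, `sum_generic` and `sum_specialised` are hypothetical names invented for this illustration. The generic version allocates a box for every intermediate result and re-checks the types on every addition; the specialised version is roughly what one would hope a JIT could produce once it knows that only integers are involved.

```python
class W_Int:
    """A boxed integer, as an interpreter might represent it."""
    def __init__(self, value):
        self.value = value


class W_Float:
    """A boxed float."""
    def __init__(self, value):
        self.value = value


def generic_add(w_a, w_b):
    # Dynamic dispatch: the operand types are inspected on every call.
    if isinstance(w_a, W_Int) and isinstance(w_b, W_Int):
        # Allocation: every intermediate result gets a fresh box.
        return W_Int(w_a.value + w_b.value)
    if isinstance(w_a, (W_Int, W_Float)) and isinstance(w_b, (W_Int, W_Float)):
        return W_Float(float(w_a.value) + float(w_b.value))
    raise TypeError("unsupported operand types")


def sum_generic(n):
    """Sum 0..n-1 the way a naive interpreter would: box and dispatch each step."""
    w_total = W_Int(0)
    for i in range(n):
        w_total = generic_add(w_total, W_Int(i))
    return w_total


def sum_specialised(n):
    """Same computation with the boxes and type checks removed from the loop."""
    total = 0
    for i in range(n):
        total += i
    return W_Int(total)  # box only once, at the end
```

Both functions compute the same result; the difference is only in how many short-lived objects and type checks the loop needs.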

In summary, besides the nice goal of speeding up Prolog, we are trying to focus our Prolog JIT on the issues and goals that have equivalents in the PyPy JIT generator. So in the end we are pretty convinced that it will give us something that we can backport to PyPy -- good ideas about what works and what doesn't, as well as some concrete algorithms.

PyPy code swarm

Following the great success of code_swarm, I recently produced a video that shows the commit history of the PyPy project.

The video shows the commits under the dist/ and branch/ directories, which is where most of the development happens.

In the first part of the video, you can clearly see our sprint-based approach: the video starts in February 2003, when the first PyPy sprint took place in Hildesheim. After a lot of initial activity, few commits happened in the next two months, until the second PyPy sprint, which took place in Gothenburg in late May 2003; around minute 0:15, you can see the high commit rate due to the sprint.

The next two years follow more or less the same pattern: very high activity during sprints, followed by long pauses between them. The most interesting breaking point is located around minute 01:55: it's January 2005, and when the EU project starts, the number of commits just explodes, as does the number of people involved.

I also particularly appreciated minute 03:08 aka March 22, 2006: it's the date of my first commit to dist/, and my nickname magically appears; but of course I'm biased :-).

The soundtrack is NIN - Ghosts IV - 34: thanks to xoraxax for having added the music and uploaded the video.


Cool. There was less of a drop off after the eu project ended than I expected!

Funding of some recent progress by Google's Open Source Programs

As readers of this blog already know, PyPy development has recently focused on getting the code base to a more usable state. One of the most important parts of this work was creating an implementation of the ctypes module for PyPy, which provides a realistic way to interface with external libraries. The module is now fairly complete (if somewhat slow), and has generated a great deal of community interest. One of the main reasons this work progressed so well was that we received funding from Google's Open Source Programs Office. This is really fantastic for us, and we cannot thank Google and Guido enough for helping PyPy progress more rapidly than we could have with volunteer-only time!

This funding opportunity arose from the PyPy US road trip at the end of last year, which included a visit to Google. You can check out the video of the talk we gave during our visit. We wrapped up our day with discussions about the possibility of Google funding some PyPy work, and soon after we were at work on the proposal for improvements we'd submitted.

One nice side-effect of the funding is indeed that we can use some of the money for funding travels of contributors to our sprint meetings. The next scheduled Google funding proposal also aims at making our Python interpreter more usable and compliant with CPython. This will be done by trying to fully run Django on top of PyPy. With more efforts like this one we're hoping that PyPy can start to be used as a CPython replacement before the end of 2008.

Many thanks to the teams at merlinux and Open End for making this development possible, including Carl Friedrich Bolz, Antonio Cuni, Holger Krekel, Maciek Fijalkowski at merlinux, Samuele Pedroni and yours truly at Open End.

We always love to hear feedback from the community, and you can get the latest word on our development and let us know your thoughts here in the comments.

Bea Düring, Open End AB

PS: Thanks Carl Friedrich Bolz for drafting this post.

That's great! I like that this project is getting bigger, growing faster :)

I wish I could help, but don't know where to start :-[

I've been hard on Guido in the past for not throwing more support behind PyPy, and I'm very glad now to hear that Guido (and Google) are demonstrating its importance. Thanks all.

Wow, I am actually more excited by hearing that pypy will be a partial cpython replacement this year than by the google money. Pypy is the most interesting project going on right now in the python world.


Congrats. I'm very glad to keep hearing about efforts to make PyPy usable with real-world applications and frameworks. The PyPy project is starting to send out positive signals, and this is something I've been waiting for.

Pdb++ and rlcompleter_ng

When hacking on PyPy, I spend a lot of time inside pdb; thus, I tried to create a more comfortable environment where I can pass my nights :-).

As a result, I wrote two modules:

- pdb.py, which extends the default behaviour of pdb by adding some commands and some fancy features such as syntax highlighting and powerful tab completion; pdb.py is meant to be placed somewhere in your PYTHONPATH, in order to override the default version of pdb.py shipped with the stdlib;

- rlcompleter_ng.py, whose most important feature is the ability to show coloured completions depending on the type of the objects.

To find more information about those modules and how to install them, have a look at their docstrings.

It's important to underline that these modules are not PyPy specific, and they also work perfectly on top of CPython.
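As a rough idea of what type-dependent coloured completion involves, here is a minimal sketch built on the standard library's `rlcompleter.Completer`. It is not the code of rlcompleter_ng: the colour table `COLOURS` and the helpers `completions` and `colourise` are hypothetical names made up for this illustration, and the sketch only builds the coloured strings without hooking into readline.

```python
import rlcompleter

# Hypothetical colour table (ANSI escape codes); not rlcompleter_ng's actual one.
COLOURS = {int: "\033[33m", str: "\033[32m"}
RESET = "\033[0m"


def completions(prefix, namespace):
    """Return all completions of `prefix` found by the stdlib Completer."""
    completer = rlcompleter.Completer(namespace)
    found = []
    state = 0
    while True:
        # complete() returns the state-th match, or None when there are no more.
        match = completer.complete(prefix, state)
        if match is None:
            break
        found.append(match)
        state += 1
    return found


def colourise(name, namespace):
    """Wrap `name` in a colour chosen from the type of the object it names."""
    colour = COLOURS.get(type(namespace.get(name)), "")
    return colour + name + RESET if colour else name


namespace = {"speed_limit": 90, "speed_unit": "km/h"}
names = sorted(completions("speed_", namespace))
coloured = [colourise(n, namespace) for n in names]
```

Printing the entries of `coloured` on an ANSI-capable terminal would show the integer and the string in different colours.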

That's pretty impressive, but I think having to modify readline itself in order to do this is a little excessive. readline's completion capabilities are pretty limited. I wonder if there are any better alternatives that could be used with Python.

I have something similar set up for my Python prompt: https://bitheap.org/hg/dotfiles/file/tip/.pythonrc.py -- it allows completion and indentation, it persists command history with readline, and it prints documentation if you try to evaluate certain objects like functions, classes, and methods. It also pretty-prints output, but I'm still trying to tweak it so it's aware of the terminal width.

yes, I agree that having to modify readline is not too nice. I tried hard to avoid this but with bad luck :-/.

I suppose I could try to reimplement readline in Python, but I think it would be too much work; if you are aware of something already done, please let me know :-).

Would this work be suitable for inclusion in the standard pdb module? That would be awesome.

Thanks!

There is readline implementation on top of pyrepl in pypy already :) PyPy by default does not use readline, but just uses this.

Nice job antonio. I'd clean the code up, conform to new-style classes and proper MRO handling. I'd also think about refactoring some of those names and find something better suited. Overall, awesome job man.

This looks great. You've taken a step further than my own attempts here:

https://aspn.activestate.com/ASPN/Cookbook/Python/Recipe/498182

Two small comments though: it crashes on startup without the right config in ~/.pdbrc.py, and once I got it started I see things like this when completing with tab:

^[[000;00m^[[00mtest^[[00m

but syntax highlighting seems to work perfectly. Thanks!

@Stephen: as described in the docs of rlcompleter_ng, to use colorized completion you need to use a patched version of readline, there is no chance to get it working without that.

Could you describe in more detail what problem you encountered with ~/.pdbrc.py, so that I can fix it, please?

Antonio - I created a minor patch for rlcompleter_ng.py which will allow it to run on both Python 2 and 3.

https://mikewatkins.ca/2008/12/10/colorized-interpreter/

Running Nevow on top of PyPy

Another episode of the "Running Real Applications on Top of PyPy" series:

Today's topic: Divmod's Nevow. Nevow (pronounced as the French "nouveau", or "noo-voh") is a web application construction kit written in Python. Which means it's just another web framework, but this time built on top of Twisted.

While, due to some small problems, we're not yet able to pass the full Twisted test suite on top of pypy-c, Nevow seems to be simple enough to work perfectly (959 out of 960 unit tests passing, with the last one recognized as pointless and about to be deleted). Also, thanks to exarkun, Nevow no longer relies on ugly details like refcounting.

As usual, translate pypy using:

translate.py --gc=hybrid --thread targetpypystandalone --faassen --allworkingmodules --oldstyle

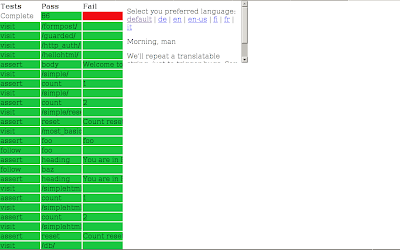

Of course, obligatory to the series, screenshot:

This is Nevow's own test suite.

This is Nevow's own test suite.

Cheers,

fijal

Next sprint: Vilnius/Post EuroPython, 10-12th of July

As in previous years, there will be a PyPy sprint just after EuroPython. The sprint will take place in the same hotel as the conference, from 10th to 12th of July.

This is a fully public sprint: newcomers are welcome, and on the first day we will have a tutorial session for those new to PyPy development.

Some of the topics we would like to work on:

- try out Python programs and fix them or fix PyPy or fix performance bottlenecks

- some JIT improvement work

- port the stackless transform to ootypesystem

Of course, other topics are also welcome.

For more information, see the full announcement.

German Introductory Podcast About Python and PyPy

During the Berlin Sprint, Holger was interviewed by Tim Pritlove for Tim's podcast "Chaosradio Express". The whole thing is in German, so it is only of interest to German speakers. The PyPy episode can be found here. The interview touches on a lot of topics, starting with a fairly general intro about what Python is and why it is interesting, and then moving on to explaining and discussing PyPy. The bit about PyPy starts after about 45 minutes. There is also a comment page about the episode.

Thanks CF for linking - i found it actually a fun interview although i was caught a bit in surprise that it focused first a lot on Python-the-language and i didn't feel in evangelising mode.

And what i again realized is that PyPy is not too well known or understood outside the Python world. Maybe it would help, also for getting some funding, if it were.

It seems a pity non-German speakers cannot benefit from this. Any chance of an English version?

Running Pylons on top of PyPy

The next episode of the "Running Real Applications on Top of PyPy" series:

Yesterday, we spent some time with Philip Jenvey on tweaking Pylons and PyPy to cooperate with each other. While doing this we found some pretty obscure details, but in general things went well.

After resolving some issues, we can now run all (72) Pylons tests on top of pypy-c compiled with the following command:

translate.py --gc=hybrid --thread targetpypystandalone --faassen --allworkingmodules --oldstyle

and run an example application. Here is the obligatory screenshot (of course it might be fake, as usual with screenshots). Note: I broke the application on purpose to showcase the cool debugger; the default screen is just boring:

Please note that we ran the example application without DB access, since we need some more work to get SQLAlchemy to run on top of pypy-c together with pysqlite-ctypes. Just one example of an obscure detail that SQLAlchemy is relying on in the test suite:

    class A(object):
        locals()[42] = 98

Update: This is only about new-style classes.

This works on CPython and doesn't on PyPy.
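Part of what makes this detail obscure is that writing through `locals()` is only loosely specified. As a minimal sketch (the function name `f` is just for illustration), inside an ordinary function body CPython itself ignores such writes:

```python
def f():
    x = 1
    # Writing to the dictionary returned by locals() does not rebind the
    # real local variable inside a function body.
    locals()["x"] = 2
    return x


result = f()
```

Here `result` is still 1. The class-body case in the SQLAlchemy test is different: it relies on `locals()` exposing the class namespace directly, which is the behaviour reported above as working on CPython but not on PyPy.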

Cheers,

fijal

Very good to see this work! This is a good thing to be trying and hearing it makes me happy.

We're busy working on making Zope 3 run on Jython, which should make some of our C-level dependencies optional. These make a port to PyPy harder as well. Zope 3 libraries have umpteen thousands of tests that can be run, so that should give one some coverage. The libraries come packaged separately too.

The trickiest part would be those bits that depend on the ZODB. Porting the ZODB to PyPy should allow new possibilities, but it'll be hard too, I imagine.

Hi Martijn,

in fact having zope3 work with pypy would be very nice. i discussed a bit with Phillip and he suggested to first get zope.interface and zope.component to work, then zope.proxy/zope.security. IIRC my first try with zope.interface yielded 3 failures out of 231 tests. I had to hack the test runner a bit to not rely on GC details - i guess that your work for Jython might imply that as well. What is the best way to follow your Jython work, btw?

best & cheers,

holger

The Jython project is a summer of code project. Georgy Berdyshev is the student and is sending messages to jython-dev.

Here was a recent status report:

https://sourceforge.net/mailarchive/forum.php?thread_name=ee8eb53d0806082009g5aec43dbn3da1f35b751cba70%40mail.gmail.com&forum_name=jython-dev

I see that the hyperlink to Georgy's report just now got eaten by the comment software. Here it is again, hopefully working this time.

Georgy Berdyshev is lurking in the #pypy channel (gberdyshev or similar), FWIW.

Let's see who entered that line:

4361 pje # This proves SA can handle a class with non-string dict keys

4361 pje locals()[42] = 99 # Don't remove this line!

pje ? Yes. That PJE. Complete with "don't remove this!"....we'll have to see what mr. guru was up to with that one. This test is also only present in the 0.5 branch which hasn't had alpha releases yet.

Would love to hear some other examples of "obscure details" the test suite is relying upon...my guess would be extremely few or none besides this one example.

It tests that SQLAlchemy isn't depending on class dictionaries containing only string keys.

Unfortunately, this makes the test then depend on the ability to have non-string keys in the class dictionary. ;-)

The test is to ensure that SQLAlchemy will be able to map objects whose *classes* have AddOns defined.

By the way, as of PEP 3115, the locals() of a class can be an arbitrary object, so making compile-time assumptions about what *can't* be done with a class' locals() is probably not a good idea.

Also, as of every existing version of Python>=2.2, a metaclass may add non-string keys to the class dictionary during class.__new__. So, it has never been a valid assumption that class __dict__ keys *must* be strings. If PyPy is relying on that, it is already broken, IMO.

PJE: What you say is mostly beside the point. PyPy has no problem at all with non-string keys in (old-style) class dicts. The point is more that locals() cannot be used to assign things to this dictionary, see the docs:

"locals()

Update and return a dictionary representing the current local symbol table. Warning: The contents of this dictionary should not be modified; changes may not affect the values of local variables used by the interpreter."

Well, if you plan on supporting, say, Zope or Twisted, you'll need to support modifying class-body frame locals.

There really isn't any point to optimizing them, not only due to PEP 3115, but also due to pre-3115 metaclasses. (And just the fact that most programs don't execute a lot of class suites in tight loops...)

Zope does things like:

    frame = sys._getframe(1)
    frame.f_locals['foo'] = bar

It does this to make zope.interface.implements() work, among other things. This allows you to do the following:

    # IFoo is actually an instance, not a class
    class IFoo(zope.interface.Interface):
        pass

    class Myclass:
        # stuffs information in the class
        zope.interface.implements(IFoo)

The martian library (which Grok uses) actually generates this into its directive construct.

Some of this stuff could become class decorators in the future, I imagine, but we're stuck supporting this feature for the foreseeable future as well.

List comprehension implementation details

List comprehensions are a nice feature in Python. They are, however, just syntactic sugar for for loops. E.g. the following list comprehension:

    def f(l):
        return [i ** 2 for i in l if i % 3 == 0]

is sugar for the following for loop:

    def f(l):
        result = []
        for i in l:
            if i % 3 == 0:
                result.append(i ** 2)
        return result

The interesting bit about this is that list comprehensions are actually implemented in almost exactly this way. If one disassembles the two functions above one gets sort of similar bytecode for both (apart from some details, like the fact that the append in the list comprehension is done with a special LIST_APPEND bytecode).
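A minimal way to check this yourself uses the standard `dis` module. The sketch below targets Python 3, where the bytecode differs in detail from the Python 2.5 output discussed in this post; the names `f_comp`, `f_loop` and the helper `opnames` are made up for the illustration. Because some Python 3 versions compile the comprehension into a separate nested code object, the helper also walks nested code objects.

```python
import dis


def f_comp(l):
    return [i ** 2 for i in l if i % 3 == 0]


def f_loop(l):
    result = []
    for i in l:
        if i % 3 == 0:
            result.append(i ** 2)
    return result


def opnames(code):
    """Collect opcode names from a code object and any nested code objects."""
    names = {ins.opname for ins in dis.get_instructions(code)}
    for const in code.co_consts:
        if hasattr(const, "co_code"):
            names |= opnames(const)
    return names


comp_ops = opnames(f_comp.__code__)
loop_ops = opnames(f_loop.__code__)
```

The comprehension version contains the special `LIST_APPEND` opcode, while the explicit loop performs an ordinary method call to `append`. Calling `dis.dis(f_comp)` and `dis.dis(f_loop)` prints the full listings for comparison.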

Now, when doing this sort of expansion there are some classical problems: what name should the intermediate list get that is being built? (I said classical because this is indeed one of the problems of many macro systems). What CPython does is give the list the name _[1] (and _[2]... with nested list comprehensions). You can observe this behaviour with the following code:

    $ python
    Python 2.5.2 (r252:60911, Apr 21 2008, 11:12:42)
    [GCC 4.2.3 (Ubuntu 4.2.3-2ubuntu7)] on linux2
    Type "help", "copyright", "credits" or "license" for more information.
    >>> [dir() for i in [0]][0]
    ['_[1]', '__builtins__', '__doc__', '__name__', 'i']
    >>> [[dir() for i in [0]][0] for j in [0]][0]
    ['_[1]', '_[2]', '__builtins__', '__doc__', '__name__', 'i', 'j']

That is a sort of nice decision, since you can not reach that name by any "normal" means. Of course you can confuse yourself in funny ways if you want:

    >>> [locals()['_[1]'].extend([i, i + 1]) for i in range(10)]
    [0, 1, None, 1, 2, None, 2, 3, None, 3, 4, None, 4, 5, None, 5, 6, None, 6, 7, None, 7, 8, None, 8, 9, None, 9, 10, None]

Now to the real reason why I am writing this blog post. PyPy's Python interpreter implements list comprehensions in more or less exactly the same way, with one tiny difference: the name of the variable:

    $ pypy-c-53594-generation-allworking
    Python 2.4.1 (pypy 1.0.0 build 53594) on linux2
    Type "help", "copyright", "credits" or "license" for more information.
    ``the globe is our pony, the cosmos our real horse''
    >>>> [dir() for i in [0]][0]
    ['$list0', '__builtins__', '__doc__', '__name__', 'i']

Now, that shouldn't really matter for anybody, should it? Turns out it does. The following way too clever code is apparently used a lot:

    __all__ = [__name for __name in locals().keys()
               if not __name.startswith('_') or __name == '_']

In PyPy this will give you a "$list0" in __all__, which will prevent the import of that module :-(. I guess I need to change the name to match CPython's.
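For code that wants to build `__all__` this way without depending on how the interpreter names its temporaries, one option is to snapshot the names first and keep only valid identifiers. This is a sketch of that idea, not what Paste or any other library actually does; the helper name `public_names` and the sample dictionary are hypothetical, and `str.isidentifier` assumes Python 3.

```python
def public_names(namespace):
    """Names suitable for __all__: valid identifiers that are public, plus '_'."""
    names = list(namespace)  # snapshot, taken outside any comprehension
    return [
        name for name in names
        if name.isidentifier() and (not name.startswith("_") or name == "_")
    ]


# Sample namespace containing both styles of hidden temporary name.
example = {"foo": 1, "_private": 2, "_": 3, "$list0": 4, "_[1]": 5}
exported = public_names(example)
```

With this filter, neither `$list0` nor `_[1]` can end up in the exported list, whichever interpreter produced them.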

Lesson learned: no detail is obscure enough to not have some code depending on it. Mostly problems on this level of obscurity are the things we are fixing in PyPy at the moment.

In fairness, the clever code does not depend on the name looking as it actually does in CPython; the clever code merely expects that variables auto-created by Python internals will begin with an underscore. Which is far more reasonable than actually expecting the specific name "_[1]" (and, wow, you're right, that does look weird; you've shown me something I've never seen before about Python!) to turn up in the variable list.

Actually, that piece of code is looking to export only public identifiers, right? It's trying to exclude things prefixed with an underscore that are in the file scope.

I would have said "Lesson learned: when MIT hackers in the 1960's come up with some funny thing called GENSYM, it's not just because they're weird; it really does serve a purpose". But then I'm an asshole Lisp hacker. :-)

anonymous: Using gensym for getting the symbol wouldn't have helped in this case at all. The gensymmed symbol would still have shown up in the locals() dictionary. So depending on whether the gensym implementation returns symbols that start with an underscore or not, the same bug would have occurred.

Other languages have the capability/design/philosophy to make such implementation details totally unobservable.

Haskell has list comprehensions which expand into normal code. These cannot expose implementation details or temporary names.

turingtest: I agree that that would be preferable, but it's sort of hard with the current interpreter design. Also, it's a pragmatic implementation in that the interpreter didn't have to change at all to add the list comps.

The code's not overly clever, it's ridiculous, because it exactly duplicates the effects of not having __all__ at all. From foo import * already won't import names prefaced with an underscore. Also from the google code search it looks like it's mostly used in Paste, most of the other hits are false positives.

The "from foo import *" case (without __all__ defined) is a good enough reason to match the CPython naming, though, the useless code in Paste notwithstanding.

carl: something like GENSYM would still help, since the symbol generated is not accessible from any package.

That's the difference between gensym and mktemp. However, I don't believe that Python has the concept of uninterned symbols (someone who knows more about Python could correct me).

arkanes: no, the "from foo import *" case isn't really changed by the different choice of symbols because the new variable is really only visible within the list comprehension and deleted afterwards. It doesn't leak (as opposed to the iteration variable).

arkanes: This is not the same as not having __all__ defined. __all__ would skip the function _() which is used to mark and translate strings with gettext. In other words, it is emulating the default no __all__ behavior and adding in _()

Carl: doesn't the "$list0" get imported without the all? If not what keeps it from causing a problem normally? Could you not just delete the $list0 variable after assigning it to the LHS?

chris: yes, deleting this variable is exactly what PyPy does (and CPython as well). That's what I was trying to say in my last post.

The bug with the __all__ only occurs because locals is called within the list comprehension. After the list comprehension is done there is no problem.

Better Profiling Support for PyPy

As PyPy is getting more and more usable, we need better tools for working on applications running on top of PyPy. Out of this interest, I spent some time implementing the _lsprof module, which has been part of the standard library since Python 2.5. It is necessary for the cProfile module, which can profile Python programs with high accuracy and a lot less overhead than the older, pure-Python profile module. Together with the excellent lsprofcalltree script, you can display this data using kcachegrind, which gives you great visualization possibilities for your profile data.
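For readers who have not used cProfile before, here is a minimal sketch of collecting and printing a profile with the standard `cProfile` and `pstats` modules. The workload function `slow_square_sum` is a hypothetical example; converting the data for kcachegrind with lsprofcalltree is a separate step that is not shown here.

```python
import cProfile
import io
import pstats


def slow_square_sum(n):
    # Hypothetical workload, only here to give the profiler something to measure.
    return sum(i * i for i in range(n))


profiler = cProfile.Profile()
profiler.enable()
total = slow_square_sum(10000)
profiler.disable()

# Format the five most expensive entries (by cumulative time) into a string.
stream = io.StringIO()
stats = pstats.Stats(profiler, stream=stream)
stats.sort_stats("cumulative").print_stats(5)
report = stream.getvalue()
```

Printing `report` shows one row per profiled function, with call counts and timings.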

Cheers,

fijal

What is the reason you would back-port the Prolog implementation to RPython, and not make Prolog itself the standard language for implementing the JIT?

That sounds like a great subject for a thesis for Carl. :-)

Congratulations guys.

shalabh: because (hopefully) porting back to rpython is saner than porting all of our interpreter (including modules) to prolog.

A bit unusual approach =)

Hope it'll help...

What about making PyPy useful?

There's still a need for a Python compiler, but so far, you can't run standard libraries (e.g. PyObjC) and you run slower than CPython. -- Even JavaScript is faster than you (SquirrelFish).

One thing I've never quite understood: how will the JIT-generation transform interact with more traditional optimization schemes?

Concrete example: say in a function I want to perform some algebraic reductions of math operations which will change a lot of the instructions. Since the JIT generation turns the interpreter into a JIT, presumably I have to write the optimization at the interpreter level.

I can see how that could work for the simplest kind of optimizations (special cases should be specialized at runtime after they go green, if I understand the rainbow colour scheme.)

I don't see yet how the more complex optimizations I'd write on static, fixed-type code will look in this context. IIUC at interpreter level I can only access the JIT's observations via tests like "if type(a) == FloatType" which should be filled after they're known-- but that's inside the function itself, and I don't see how to access that information from anything outside.

dsm: This is a two-level approach, corresponding to two levels of optimisations that are useful for dynamic languages like Python: the "high level" is the unboxing and dispatching removing that I describe in the post (which by itself can give something like a factor 50-100 speed-up in the best cases). Traditional "low level" optimisations can be performed on top of that, by optimising the generated code that comes out of the "high level" (and this could give another 2-4x speed-up, i.e. the same difference as between "gcc" and "gcc -O3").

In this Prolog experiment we are only focusing on how to get the high level optimisations.

The references in the paper are not properly numbered -- any idea if it could be fixed?

Michel: Thanks for noticing, it should be fixed.

Could you possibly profit from generating a JIT compiler for Lua (www.lua.org) and compare it to Mike Pall's Lua-Jit (https://luajit.org/)?

While the paper was too difficult for me to understand fully, it was still an interesting read and I appreciate you posting it.

FYI: There is a project called Pyke which adds Prolog-like inferencing to Python. This integrates with Python allowing you to include Python code snippets in your rules.

Don't know if this would be useful, but you can check it out at https://pyke.sourceforge.net.

Shalabh: It's also important to note 3 big benefits of implementing a language in the language itself, or a subset thereof ("turtles all the way down").

(1) Debugging and testing tools for programs written in the language then (hopefully) also work for debugging and testing the language implementation with minimal (or no) modification. This also HUGELY lowers the bar for ordinary users of the language to find and fix implementation bugs. This isn't a fault of Prolog, but 99.99% of Python users won't touch a Prolog debugger with a 10-foot pole.

(2) The largest pool of people most interested in improving the language is presumably the expert heavy users of the language. Forcing them to learn a new language and/or implement the language in a language outside their expertise is a large disadvantage.

(3) The difference between language builtins and user code is reduced. Also, it forces certain powerful constructs to (at times) be exposed in the language when they might otherwise only be exposed in the implementation language. Also, with "turtles all the way down", performance improvements in the language itself also often apply to the language builtins, which increases the benefit of improvements, which is important in the cost/benefit analysis for undertaking the performance improvements in the first place. Having "turtles all the way down" makes some optimizations worthwhile that otherwise would be too much trouble to implement.